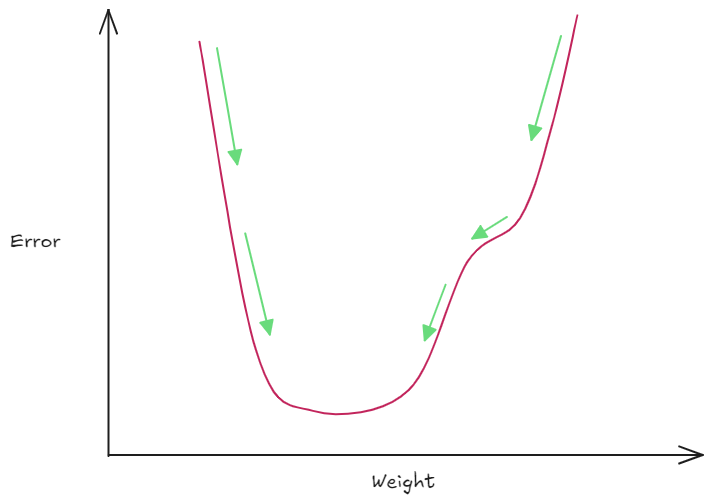

Gradient descent is an iterative optimization algorithm that minimizes a function by repeatedly taking small steps in the opposite direction of the function’s gradient.

If you think of the gradient as the slope of a hill, gradient descent amounts to walking downhill along that slope, one step at a time.
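To make the "repeated small steps" idea concrete, here is a minimal sketch for a one-dimensional function. The names `gradient_descent`, `learning_rate`, and `steps` are illustrative assumptions rather than anything from a particular library, and the example function f(x) = (x - 3)^2 is chosen only because its minimum at x = 3 is easy to check.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Approximately minimize a 1-D function, given its gradient `grad`.

    Hypothetical helper for illustration: it runs a fixed number of steps
    and makes no attempt to detect convergence.
    """
    x = x0
    for _ in range(steps):
        # Move a small distance in the direction opposite to the gradient.
        x = x - learning_rate * grad(x)
    return x


# Example: f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3.
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
```

With these settings the distance to the minimum shrinks by a constant factor on every step, so `x_min` ends up very close to 3. A learning rate that is too large can overshoot and diverge, which is why the steps are kept small.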

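The idea is similar to hill climbing, which also improves a solution step by step, except that hill climbing is usually described as moving uphill toward a maximum, whereas gradient descent moves downhill toward a minimum.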