Abstract

Big-O is a way to describe the time or space complexity of algorithms. It expresses an upper bound of an algorithm’s time or space complexity, a measure of the “worst case scenario”.

Why (application/feasibility)

- allows programmers to compare different algorithms and choose the most efficient one

- helps in understanding scalability of algorithms and predicting how they will perform as input size grows

- enables developers to optimise code and improve performance

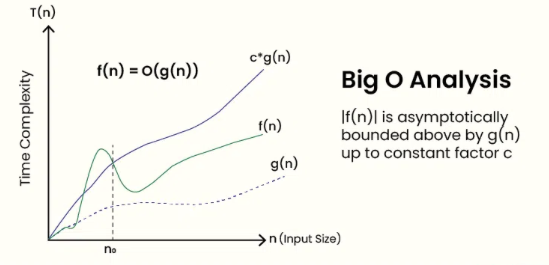

Formal Mathematical Definition

Given two functions and We say that if there exists constants and such that , for all . In other words, grows no faster than

How to find Big-O

- ignore lower order terms, consider highest order term (e.g. term with highest power)

- ignore constant (coefficient) associated with highest order term

- see Other Examples of Big-O for simple/difficult examples

Why we concatenate log terms

Say we had a program of Big-O of Let be a constant As is a constant coefficient, it can be taken out and ignored for Big-O notation. As such, the Big-O notation of any log term can be converted to have any base term. Thus the base term simply does not matter and can be ignored, and written as

Properties

-

reflexivity: for any function ,

-

transitivity: if and , then

-

nonzero constant factor: if , then

- constant multiplicative coefficients do not affect Big-O term

-

sum: if and , then .

- In other words, when combining complexities only the largest term dominates

-

product rule: if and , then

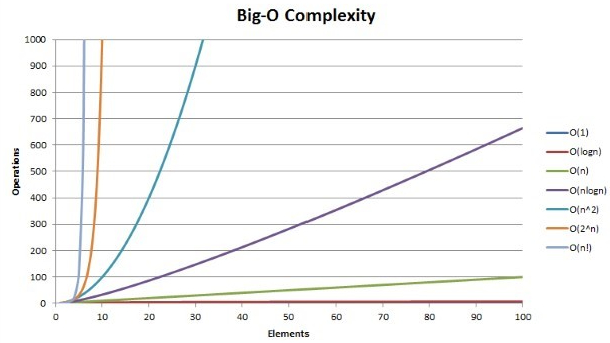

Relative size

Amortized time

Amortized time instead focuses on the average time to run, rather than the worst-case performance of the algorithm. Amortized time is determined by dividing the total running time, by the number of operations and is expressed in Big-O notation. For example, if an algorithm run an arbitrary number of times runs in , then the amortized time is .