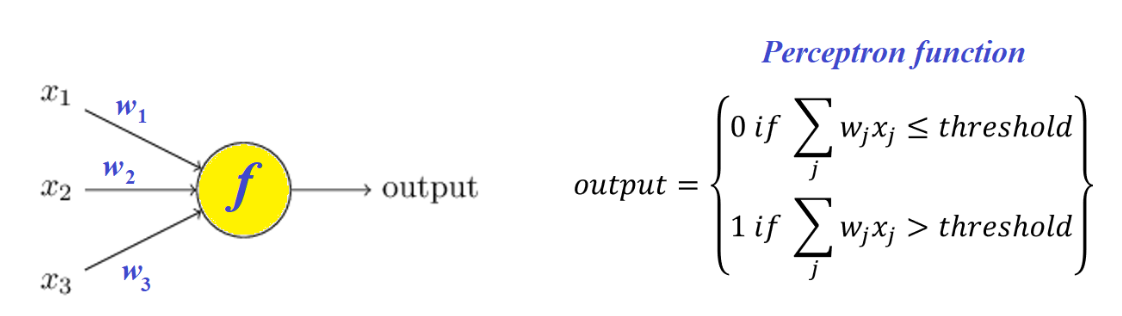

A perceptron is an artificial neuron: the simplest artificial neural network architecture, commonly used for binary classification. [1]

Components

- features: each representing a characteristic of the input data.

- weights: each feature has a weight that determines its influence on the output. The weights are adjusted during training to improve the perceptron's classifications.

- bias: an adjustable numerical term added to the weighted sum before the activation function is applied. It is independent of the inputs and shifts the decision boundary so that the boundary does not have to pass through the origin, giving the perceptron more flexibility when fitting data.

- summation function: computes the weighted sum of the inputs, i.e. the sum of each feature multiplied by its weight.

- activation function: compares the weighted sum with a threshold to produce a binary output. This is typically the Heaviside step function.
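Combining these components, the output can be written as below. This assumes $n$ features $x_1, \dots, x_n$ with weights $w_1, \dots, w_n$, a bias $b$, and a Heaviside step $H$ that outputs 1 only for strictly positive inputs (the value of $H(0)$ is a convention that varies between sources):

$$
z = \sum_{i=1}^{n} w_i x_i + b, \qquad \hat{y} = H(z) = \begin{cases} 1 & \text{if } z > 0 \\ 0 & \text{otherwise} \end{cases}
$$

A threshold $\theta$ and a bias are two views of the same parameter: $\sum_i w_i x_i > \theta$ holds exactly when $\sum_i w_i x_i + b > 0$ with $b = -\theta$.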

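The general idea is that each weight represents the importance of its input, and that the weighted sum must exceed a threshold value $\theta$ for the perceptron to output 1 rather than 0:

$$
\hat{y} = \begin{cases} 1 & \text{if } \sum_{i=1}^{n} w_i x_i > \theta \\ 0 & \text{otherwise} \end{cases}
$$

where $x_i$ are the input features and $w_i$ their weights.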

Perceptron Algorithm [2]

- Set a threshold value.

- Multiply each input feature by its weight.

- Sum the results to obtain the weighted sum.

- Activate the output: return 1 if the weighted sum exceeds the threshold, otherwise return 0.
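The four steps can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: the function name `perceptron_output` and the example numbers are hypothetical, and the comparison is a strict `>` to match "greater than a threshold".

```python
from typing import Sequence


def perceptron_output(features: Sequence[float],
                      weights: Sequence[float],
                      threshold: float) -> int:
    """Return 1 if the weighted sum of the features exceeds the threshold, else 0."""
    if len(features) != len(weights):
        raise ValueError("each feature needs exactly one weight")
    # Step 1 corresponds to choosing `threshold` (passed in by the caller).
    # Steps 2 and 3: multiply each feature by its weight and sum the products.
    weighted_sum = sum(x * w for x, w in zip(features, weights))
    # Step 4: step activation, output 1 only when the sum is strictly greater.
    return 1 if weighted_sum > threshold else 0


if __name__ == "__main__":
    # Hypothetical inputs: features 1 and 3 are active, 0.5 + 0.4 = 0.9 > 0.8.
    print(perceptron_output([1, 0, 1], [0.5, 0.9, 0.4], threshold=0.8))
```

A weighted sum exactly equal to the threshold yields 0 here; sources that use `>=` would return 1 in that case.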

Example

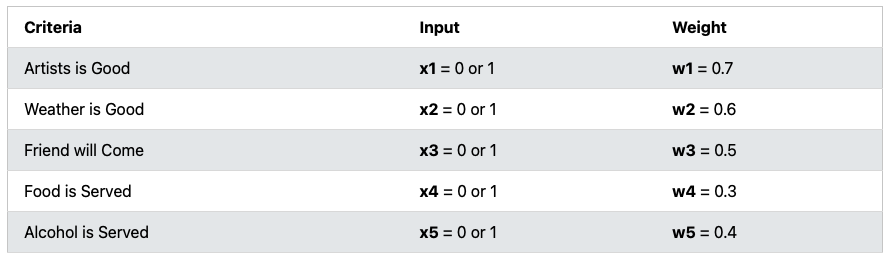

The perceptron decides whether we should go to a concert, outputting “Yes” or “No”.

- Set a threshold value: 1.5

- Multiply each input feature by its weight:

- …

- Sum the results to obtain the weighted sum.

- Activate the output: since the weighted sum is greater than the threshold of 1.5, return Yes.
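The same arithmetic for the concert decision is sketched below in Python. The figure's actual features and weights are not reproduced in this note, so the feature names, 0/1 values, and weights are hypothetical placeholders; only the threshold of 1.5 comes from the example.

```python
# Hypothetical concert features (1 = true, 0 = false) and weights;
# only the threshold of 1.5 is taken from the example above.
features = {"good_artist": 1, "good_weather": 0, "friend_comes": 1}
weights = {"good_artist": 0.8, "good_weather": 0.6, "friend_comes": 0.9}
THRESHOLD = 1.5

# Multiply each feature by its weight, then sum to get the weighted sum.
weighted_sum = sum(features[name] * weights[name] for name in features)

# Step activation: "Yes" only if the weighted sum exceeds the threshold.
decision = "Yes" if weighted_sum > THRESHOLD else "No"
print(f"weighted sum = {weighted_sum:.2f}, decision = {decision}")
```

With these placeholder values the weighted sum is 0.8 + 0 + 0.9 = 1.7, which exceeds 1.5, so the output is “Yes”.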

Limitations

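- limited to linearly separable problems: its decision boundary is a straight line (a hyperplane in higher dimensions), so a single perceptron cannot represent functions such as XOR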

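- requires labelled data for training

A multi-layer perceptron (MLP), which stacks layers of neurons with non-linear activations, overcomes the linear-separability limitation, although it is still usually trained on labelled data.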
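To illustrate the linear-separability limitation, the sketch below trains a single perceptron on AND, which is linearly separable, and on XOR, which is not. It uses the classic perceptron learning rule (not described in this note) with a learning rate of 1; the helper names and the epoch count of 50 are arbitrary choices for illustration.

```python
from typing import List, Tuple

# A labelled example: ((x1, x2), target), with binary inputs and target.
Example = Tuple[Tuple[int, int], int]


def predict(w: List[int], b: int, x1: int, x2: int) -> int:
    # Step activation on the weighted sum plus bias (threshold folded into b).
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


def train_perceptron(data: List[Example], epochs: int = 50) -> Tuple[List[int], int]:
    # Perceptron learning rule with learning rate 1: w += (target - prediction) * x.
    # Starting from zeros, all values stay integers, so the arithmetic is exact.
    w, b = [0, 0], 0
    for _ in range(epochs):
        for (x1, x2), target in data:
            error = target - predict(w, b, x1, x2)
            w[0] += error * x1
            w[1] += error * x2
            b += error
    return w, b


def accuracy(data: List[Example], w: List[int], b: int) -> float:
    correct = sum(predict(w, b, x1, x2) == target for (x1, x2), target in data)
    return correct / len(data)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b = train_perceptron(data)
    print(name, "accuracy:", accuracy(data, w, b))
```

AND reaches an accuracy of 1.0, while XOR stays below 1.0 no matter how long training runs, because no single line separates its positive and negative cases.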